150+ citations for the paper, "A Survey of Decision Fusion and Feature Fusion Strategies for Pattern Classification"; Utthara Gosa Mangai, Suranjana Samanta, Sukhendu Das and Pinaki Roy Chowdhury; IETE Technical Review, Vol. 27, No. 4, pp. 293-307, July-August 2010.

Temporal Coherency based Criteria for Predicting Video Frames using Deep Multi-stage Generative Adversarial Networks

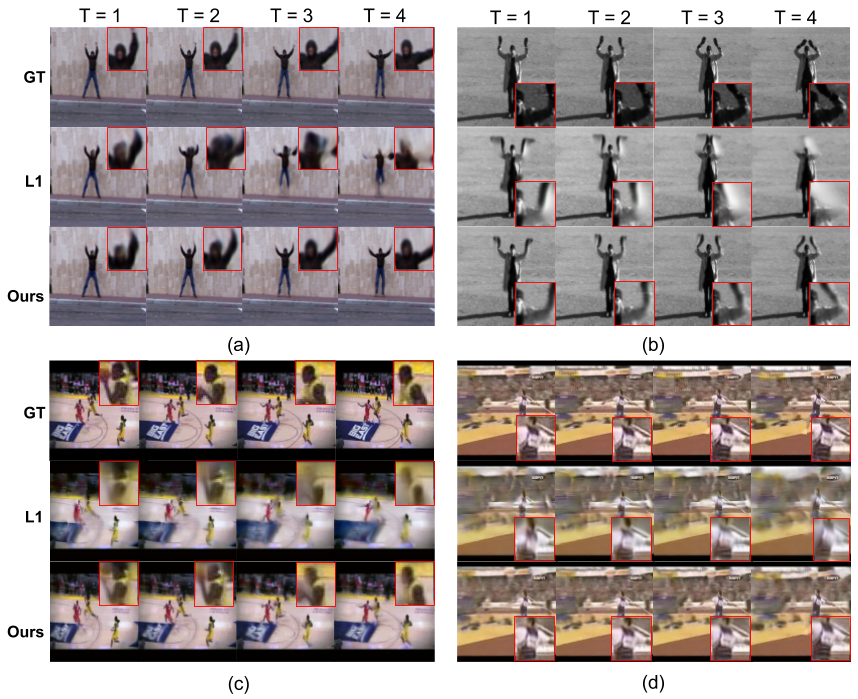

Predicting the future from a sequence of video frames has recently become a sought-after yet challenging task in computer vision and machine learning. Although there have been efforts at tracking using motion trajectories and flow features, the complex problem of generating unseen frames has not been studied extensively. In this paper, we address this problem using convolutional models within a multi-stage Generative Adversarial Network (GAN) framework. The proposed method uses two stages of GANs to generate a crisp and clear set of future frames. Although GANs have been used in the past for predicting the future, none of these works consider the relation between subsequent frames in the temporal dimension. Our main contribution lies in formulating two objective functions, based on Normalized Cross Correlation (NCC) and Pairwise Contrastive Divergence (PCD), for this problem. Coupled with the traditional L1 loss, the method has been evaluated on three real-world video datasets, viz. Sports-1M, UCF-101 and KITTI. Performance analysis shows superior results over recent state-of-the-art methods.
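As a minimal sketch of the NCC idea (simplified and assumed: the paper's exact patch neighborhoods, the PCD term and the loss weighting are not reproduced here), the code below scores temporal coherency as patch-wise NCC between consecutive predicted frames and adds it, as 1 - NCC, to the L1 reconstruction loss. Frames are grayscale NumPy arrays; `patch`, `lam_l1` and `lam_ncc` are hypothetical parameters.

```python
import numpy as np

def ncc(a, b, eps=1e-8):
    """Normalized cross correlation of two equally sized patches, in [-1, 1]."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / (np.sqrt((a * a).sum() * (b * b).sum()) + eps))

def patchwise_ncc(f1, f2, patch=8):
    """Mean NCC over non-overlapping patch x patch blocks of two frames."""
    h, w = f1.shape
    scores = [
        ncc(f1[i:i + patch, j:j + patch], f2[i:i + patch, j:j + patch])
        for i in range(0, h - patch + 1, patch)
        for j in range(0, w - patch + 1, patch)
    ]
    return float(np.mean(scores))

def combined_loss(pred, target, lam_l1=1.0, lam_ncc=1.0, patch=8):
    """L1 reconstruction loss plus a (hypothetical) temporal-coherency penalty.

    pred, target: arrays of shape (T, H, W) holding T future frames.
    The coherency term penalizes low NCC between consecutive predicted frames.
    """
    l1 = float(np.abs(pred - target).mean())
    coherence = np.mean([1.0 - patchwise_ncc(pred[t], pred[t + 1], patch)
                         for t in range(len(pred) - 1)])
    return lam_l1 * l1 + lam_ncc * float(coherence)
```

In an actual GAN this would be written with a differentiable framework and combined with the adversarial loss; NumPy is used here only to keep the sketch self-contained.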

Project Page

Related Publication

"Temporal Coherency based Criteria for Predicting Video Frames using Deep Multi-stage Generative Adversarial Networks", Prateep Bhattacharjee and Sukhendu Das; 31st Conference on Advances in Neural Information Processing Systems (NIPS) [A* | h5-index - 101], Long Beach, California, United States of America, 2017.

MST-CSS (Multi-Spectro-Temporal Curvature Scale Space) representation for Content Based Video Retrieval (CBVR)

A novel spatio-temporal feature descriptor has been proposed to efficiently represent a video object for the purpose of content-based video retrieval (CBVR). Spatial and temporal information are integrated in a unified framework for retrieving similar video shots. A sequence of orthogonal processing steps, using a pair of 1-D multiscale and multispectral filters, on the space-time volume (STV) of a video object (VOB) produces a gradually evolving (smoother) surface. Zero-crossing contours (2-D), computed using the mean curvature of this evolving surface, are stacked in layers to yield a highly corrugated (hilly) surface. This gives a joint multi-spectro-temporal curvature scale space (MST-CSS) representation of the video object. Peaks and valleys (saddle points) are detected on the MST-CSS surface for feature representation and matching. Computing the cost function for matching a query video shot with a model involves matching a pair of 3-D point sets, with their attributes (local curvature), and the 3-D orientations of the finally smoothed STV surfaces. Experiments performed with simulated and real-world video shots, using the precision-recall metric, show enhanced performance.
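MST-CSS extends the classical curvature scale space (CSS) idea to a 3-D space-time volume. The sketch below shows only the classical 2-D analogue on a closed contour, as an illustration of the underlying principle and not the authors' method: the contour is smoothed by a Gaussian at increasing scales, its curvature is computed, and the curvature zero crossings are counted. As the scale grows, concavities vanish and the zero crossings disappear. The function names and the FFT-based circular smoothing are our own choices.

```python
import numpy as np

def smoothed_curvature(x, y, sigma):
    """Curvature of a closed contour after Gaussian smoothing with std `sigma` (in samples)."""
    n = len(x)
    omega = 2 * np.pi * np.fft.fftfreq(n)        # angular frequency of each DFT bin
    gauss = np.exp(-0.5 * (sigma * omega) ** 2)  # Fourier transform of a Gaussian kernel
    fx, fy = np.fft.fft(x) * gauss, np.fft.fft(y) * gauss
    # Spectral derivatives: d/dt corresponds to multiplication by i*omega.
    # The DFT is circular, so the contour is treated as closed.
    dx = np.real(np.fft.ifft(1j * omega * fx))
    dy = np.real(np.fft.ifft(1j * omega * fy))
    ddx = np.real(np.fft.ifft(-(omega ** 2) * fx))
    ddy = np.real(np.fft.ifft(-(omega ** 2) * fy))
    return (dx * ddy - dy * ddx) / (dx ** 2 + dy ** 2) ** 1.5

def zero_crossings(k):
    """Number of curvature sign changes around the closed contour."""
    s = np.sign(k)
    return int(np.sum(s != np.roll(s, 1)))

def css_signature(x, y, sigmas):
    """Zero-crossing count at each scale: a 1-D summary of the CSS image."""
    return [zero_crossings(smoothed_curvature(x, y, s)) for s in sigmas]

# Usage: a five-lobed, non-convex contour sampled counter-clockwise.
theta = np.linspace(0, 2 * np.pi, 256, endpoint=False)
r = 1 + 0.3 * np.cos(5 * theta)
x, y = r * np.cos(theta), r * np.sin(theta)
signature = css_signature(x, y, [0.0, 60.0])
```

At small scales each of the five concave troughs contributes two zero crossings; at a large enough scale the contour becomes convex and the count drops to zero. The positions of these crossings across scales form the CSS image that MST-CSS generalizes.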

Related Publication

"MST-CSS (Multi-spectro-temporal Curvature Scale Space), a novel spatio-temporal representation for content-based video retrieval"; A. Dyana and Sukhendu Das; IEEE Transactions on Circuits and Systems for Video Technology (Impact Factor: 1.819), Vol. 20, No. 8, pp. 1080-1094, August 2010. (Google Scholar Citations: 12)

VIDLOOKUP

This demo shows a live content-based video retrieval (CBVR) system with a web-based interface for searching video shots whose content is similar to the query. A combination of low-level cues (shape, motion and color features) is used to retrieve video shots with similar foreground moving objects. A sketch-based query of an object's silhouette and trajectory can also be given as input to the system through a GUI.
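The description does not say how the shape, motion and color cues are combined. One common option, assumed here purely for illustration, is late (decision-level) fusion: min-max normalize each cue's distances over the database and rank shots by a weighted sum. The cue names and weights below are hypothetical.

```python
from typing import Dict, List

def rank_shots(distances: Dict[str, List[float]], weights: Dict[str, float]) -> List[int]:
    """Rank database shots by a weighted sum of min-max normalized per-cue distances.

    distances maps a cue name (e.g. "shape") to one distance per database shot,
    measured from the query; smaller fused scores mean better matches.
    """
    n = len(next(iter(distances.values())))
    fused = [0.0] * n
    for cue, dists in distances.items():
        lo, hi = min(dists), max(dists)
        span = (hi - lo) or 1.0  # avoid dividing by zero when all distances are equal
        for i, d in enumerate(dists):
            fused[i] += weights.get(cue, 0.0) * (d - lo) / span
    return sorted(range(n), key=lambda i: fused[i])

# Usage with three database shots and equal (hypothetical) weights.
cue_distances = {
    "shape": [0.1, 0.5, 0.9],
    "motion": [0.2, 0.1, 0.8],
    "color": [0.3, 0.6, 0.4],
}
ranking = rank_shots(cue_distances, {"shape": 1 / 3, "motion": 1 / 3, "color": 1 / 3})
```

Normalizing per cue keeps one feature with a large numeric range from dominating the fused score.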

Related Publication

"VIDLOOKUP - a prototype demo on CBVR", selected among the top 12 demos at the International Conference on Computer Vision (ICCV 2011), Barcelona, Spain; Contributors - Chiranjoy Chattopadhyay, Sukhendu Das, A. Dyana, Sasi Inguva.

SUBBAND FACE

In this paper, a novel representation called the 'subband face' is proposed for face recognition. The subband face is generated from selected subbands obtained by wavelet decomposition of the original face image. It is surmised that certain subbands contain information that is more significant for discriminating faces than other subbands. The problem of subband selection is cast as a combinatorial optimization problem, and a genetic algorithm (GA) is used to find the optimum subband combination by maximizing the Fisher ratio of the training features. The performance of the GA-selected subband face is evaluated on three face databases and compared with other wavelet-based representations.
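A minimal sketch of GA-based subband selection, assuming each subband's coefficients are used directly as features and the Fisher ratio is simplified to trace(S_b) / trace(S_w). In practice the subbands could come from a 2-D wavelet decomposition such as PyWavelets' `pywt.wavedec2`; here they are passed in as plain arrays. The GA operators (truncation selection, one-point crossover, bit-flip mutation) and all parameters are illustrative choices, not the paper's.

```python
import numpy as np

def fisher_ratio(feats, labels):
    """Simplified Fisher ratio: trace of between-class over trace of within-class scatter."""
    mu = feats.mean(axis=0)
    s_b = s_w = 0.0
    for c in np.unique(labels):
        fc = feats[labels == c]
        mc = fc.mean(axis=0)
        s_b += len(fc) * np.sum((mc - mu) ** 2)
        s_w += np.sum((fc - mc) ** 2)
    return s_b / (s_w + 1e-12)

def fitness(mask, subbands, labels):
    """Fisher ratio of features from the selected subbands; an empty selection is invalid."""
    if not mask.any():
        return -np.inf
    chosen = np.hstack([s for s, m in zip(subbands, mask) if m])
    return fisher_ratio(chosen, labels)

def ga_select(subbands, labels, pop=20, gens=30, p_mut=0.1, seed=0):
    """Search binary subband masks (1 = keep subband) with a simple elitist GA."""
    rng = np.random.default_rng(seed)
    k = len(subbands)
    population = rng.integers(0, 2, size=(pop, k))
    for _ in range(gens):
        scores = np.array([fitness(m, subbands, labels) for m in population])
        parents = population[np.argsort(scores)[::-1][: pop // 2]]  # keep the best half
        children = []
        while len(children) < pop - len(parents):
            a, b = parents[rng.integers(len(parents), size=2)]
            cut = int(rng.integers(1, k)) if k > 1 else 0  # one-point crossover
            child = np.concatenate([a[:cut], b[cut:]])
            flip = rng.random(k) < p_mut  # bit-flip mutation
            children.append(np.where(flip, 1 - child, child))
        population = np.vstack([parents, np.array(children)])
    scores = np.array([fitness(m, subbands, labels) for m in population])
    return population[int(np.argmax(scores))]

# Usage on synthetic data: only subband 0 separates the three classes.
data_rng = np.random.default_rng(1)
labels = np.repeat([0, 1, 2], 30)
subbands = [data_rng.normal(size=(90, 4)) + 3.0 * labels[:, None]]
subbands += [data_rng.normal(size=(90, 4)) for _ in range(3)]
best = ga_select(subbands, labels)
```

Because the best half of each generation is carried over unchanged, the best mask found so far is never lost.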

Related Publication

"Selection of Wavelet Subbands using Genetic Algorithm for Face Recognition"; Vinod Pathangay and Sukhendu Das; ICVGIP '06, Madurai, India, Dec 13-16, LNCS 4338, pp. 585-596, 2006.

Best paper award at National Conference on Image Processing (NCIP '05) awarded to Vinod Pathangay and Sukhendu Das for their work published in the paper titled "Exploring the use of selective Wavelet Subbands for PCA based Face Recognition", March 2005, Bangalore, India.

EIGEN DOMAIN TRANSFORMATION (EDT)

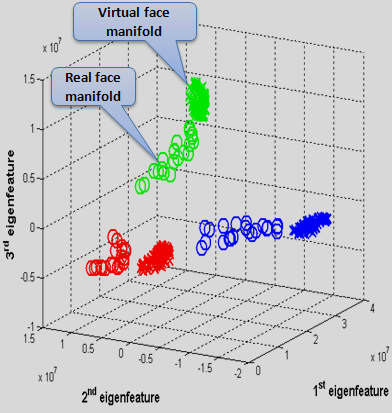

We propose a method that exploits the uniqueness of illumination variations on a subject's face images for face verification. Using a 3D wireframe model of a face, illumination variations are synthetically generated by rendering it with texture to produce virtual face images. When these virtual face images and a set of real face images are transformed into eigenspace, they form two separate clusters, one for the virtual faces and one for the real-world faces. In addition, the cluster of virtual faces for any subject is more compact than the cluster of real face images. We therefore take the virtual face cluster as the reference and find a transformation that moves real face features closer to it. We propose subject-specific transformations that relight a real face feature in eigenspace into the more compact virtual face feature cluster. These transformations are computed and stored during training. During testing, the subject-specific transformation is applied to the eigen-feature of the query face image before computing its distance from the reference cluster of the claimed subject.
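The exact form of the subject-specific transformation is not given here. As an assumption, the sketch below uses a per-dimension affine map in eigenspace that matches the mean and spread of a subject's real-face features to those of that subject's virtual-face cluster, then verifies an identity claim by the distance of the transformed query to the virtual cluster mean. The function names, the synthetic stand-in images and the threshold are hypothetical.

```python
import numpy as np

def fit_eigenspace(train, n_components):
    """PCA basis (eigenfaces) from row-vectorized training images."""
    mean = train.mean(axis=0)
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    return mean, vt[:n_components]

def project(images, mean, basis):
    """Eigen-features of row-vectorized images."""
    return (images - mean) @ basis.T

def fit_relighting(real_feats, virtual_feats, eps=1e-8):
    """Hypothetical subject-specific map matching the virtual cluster's mean and per-axis spread."""
    mu_r, sd_r = real_feats.mean(axis=0), real_feats.std(axis=0) + eps
    mu_v, sd_v = virtual_feats.mean(axis=0), virtual_feats.std(axis=0)
    scale = sd_v / sd_r
    return lambda f: mu_v + (f - mu_r) * scale

def verify(query_feat, transform, virtual_feats, threshold):
    """Accept the claim if the relit query lies close to the claimed subject's virtual cluster."""
    dist = float(np.linalg.norm(transform(query_feat) - virtual_feats.mean(axis=0)))
    return dist, dist <= threshold

# Usage with random stand-ins for one subject's real and rendered (virtual) images.
rng = np.random.default_rng(0)
real_imgs = rng.normal(0.0, 1.0, (30, 64))
virtual_imgs = rng.normal(1.0, 0.1, (30, 64))
mean, basis = fit_eigenspace(np.vstack([real_imgs, virtual_imgs]), 10)
real_f = project(real_imgs, mean, basis)
virt_f = project(virtual_imgs, mean, basis)
transforms = {"subject_01": fit_relighting(real_f, virt_f)}  # computed and stored during training
dist, accepted = verify(real_f[0], transforms["subject_01"], virt_f, threshold=5.0)
```

A per-axis scaling is the simplest map that shrinks the real cluster to the virtual cluster's compactness; a full linear map would also be possible but needs more training samples per subject.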

Related Publication

"Eigen-domain Relighting of Face Images for Illumination-invariant Face Verification"; Vinod Pathangay and Sukhendu Das; IEEE ICAPR 2009, Kolkata, India, February 4-6, 2009, pp. 437-440.

ESS

Work under review

GAME DESCRIPTION LANGUAGE (GDL)

We designed a Game Description Language (GDL) for game development. Any game comprises a set of scenes and their animation properties (moving characters, objects, etc.). We use GDL-based rules to describe the game. Even an inexperienced user can customize the game through a GDL file. A GDL file has an XML-like syntax, which a user can easily modify to customize the game by merely changing parameters. A parser or interpreter has also been designed to transform a user-defined GDL file into a parametric scene file, which the game engine modules use to render a scene.
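The actual GDL tags are not listed on this page. The sketch below assumes a hypothetical schema (`game`, `scene`, `character` and `object` elements with numeric attributes) and uses Python's standard `xml.etree.ElementTree` to turn a GDL file into a flat, parametric scene description; the output format is likewise invented for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict

# Hypothetical GDL file: changing a parameter (e.g. speed) customizes the game.
SAMPLE_GDL = """
<game name="demo">
  <scene id="level1" background="forest.png">
    <character name="hero" x="10" y="20" speed="2.5"/>
    <object name="tree" x="50" y="0"/>
  </scene>
</game>
"""

def _coerce(value: str) -> Any:
    """Convert numeric attribute strings to floats; leave other strings unchanged."""
    try:
        return float(value)
    except ValueError:
        return value

def parse_gdl(text: str) -> Dict[str, Any]:
    """Parse GDL text into a nested dict of scenes and their entities."""
    root = ET.fromstring(text.strip())
    scenes = {}
    for scene in root.findall("scene"):
        entities = []
        for child in scene:
            params = {k: _coerce(v) for k, v in child.attrib.items()}
            params["kind"] = child.tag  # e.g. "character" or "object"
            entities.append(params)
        scenes[scene.get("id")] = {"background": scene.get("background"), "entities": entities}
    return {"game": root.get("name"), "scenes": scenes}

def to_scene_file(parsed: Dict[str, Any]) -> str:
    """Emit a flat, line-oriented parametric scene description for a renderer."""
    lines = []
    for sid, scene in parsed["scenes"].items():
        lines.append(f"SCENE {sid} background={scene['background']}")
        for ent in scene["entities"]:
            params = " ".join(f"{k}={v}" for k, v in ent.items() if k != "kind")
            lines.append(f"  {ent['kind'].upper()} {params}")
    return "\n".join(lines)
```

Keeping parsing and emission separate means a different engine back end only needs its own `to_scene_file` variant.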