Geethu Miriam Jacob and Sukhendu Das

Visualization and Perception Lab

Department of Computer Science and Engineering, Indian Institute of Technology, Madras, India

Accepted at the Asian Conference on Computer Vision (ACCV-2018)

Abstract

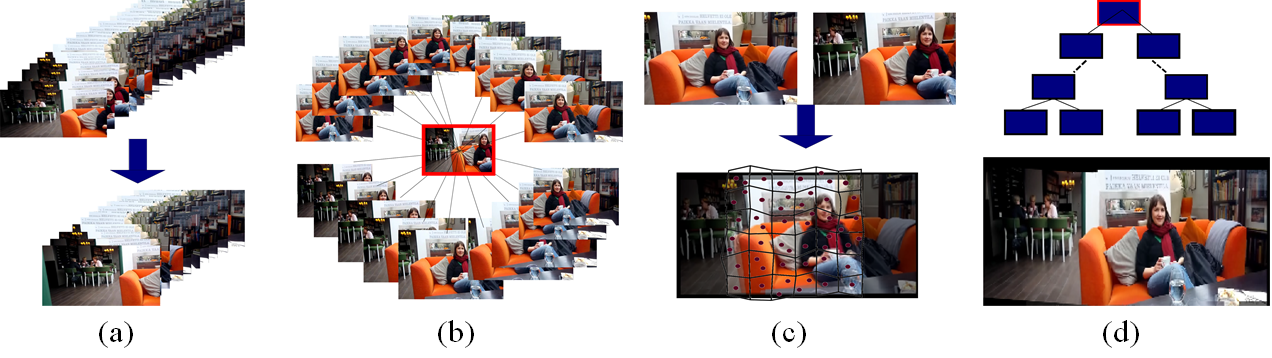

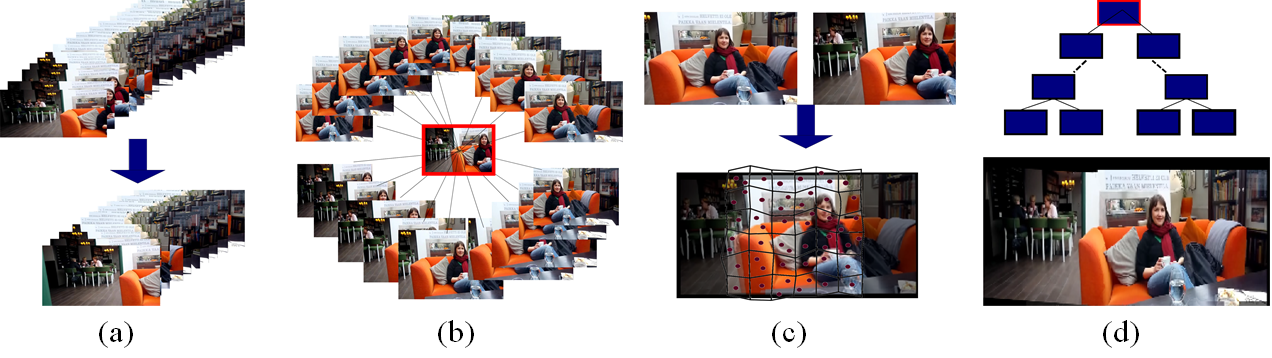

Panorama creation from unconstrained hand-held videos is a challenging task due to large parallax, moving objects and motion blur, which make aligning the frames of a hand-held video particularly difficult. The proposed method generates a panoramic view of an input video shot. The framework consists of four stages. The first stage performs a sparse frame selection based on alignment and blur scores. The second stage generates a global order for aligning the selected frames by computing a Minimum Spanning Tree (MST) rooted at the most connected frame. The third stage performs frame alignment using a novel warping model termed DiffeoMeshes, a demon-based diffeomorphic registration process for mesh deformation, and the fourth stage renders the panorama. To evaluate alignment performance, experiments were first performed on a standard dataset of image pairs. We also created a dataset of 20 video shots and used it to evaluate panorama generation. Our proposed method outperforms existing state-of-the-art methods in terms of alignment error and panorama rendering quality.
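The global ordering step described in the abstract (a Minimum Spanning Tree rooted at the most connected frame) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the pairwise `costs` (lower means a more reliable alignment), the reading of "most connected" as the frame that overlaps the largest number of other frames, and the breadth-first traversal that aligns each frame after its parent are all assumptions made for this sketch, and the function name `alignment_order` is hypothetical.

```python
import heapq
from collections import deque


def alignment_order(num_frames, costs):
    """Return (root, parent, order) for aligning frames along an MST.

    costs: dict mapping (i, j) to a pairwise alignment cost for overlapping
    frames i and j (hypothetical: lower = the pair aligns more reliably).
    Frames not connected to the root are left out of the result.
    """
    # Adjacency lists of the frame-overlap graph.
    adj = {k: [] for k in range(num_frames)}
    for (i, j), c in costs.items():
        adj[i].append((c, j))
        adj[j].append((c, i))

    # Assumption: "most connected" = frame overlapping the most other frames
    # (ties broken towards the lower frame index).
    root = max(range(num_frames), key=lambda k: (len(adj[k]), -k))

    # Prim's algorithm grown from the root, so parent links point to the root.
    parent = {root: None}
    heap = [(c, root, v) for c, v in adj[root]]
    heapq.heapify(heap)
    while heap:
        c, u, v = heapq.heappop(heap)
        if v in parent:  # already in the tree
            continue
        parent[v] = u
        for c2, w in adj[v]:
            if w not in parent:
                heapq.heappush(heap, (c2, v, w))

    # Breadth-first order: every frame is aligned after (and onto) its parent.
    children = {k: [] for k in parent}
    for v, u in parent.items():
        if u is not None:
            children[u].append(v)
    order, queue = [], deque([root])
    while queue:
        u = queue.popleft()
        order.append(u)
        queue.extend(sorted(children[u]))
    return root, parent, order


if __name__ == "__main__":
    pair_costs = {(0, 1): 0.2, (1, 2): 0.1, (1, 3): 0.4, (2, 3): 0.3, (0, 2): 0.9}
    print(alignment_order(4, pair_costs))
```

With these example costs, frame 1 becomes the root and the frames are aligned in the order 1, 0, 2, 3, with frame 3 warped onto frame 2. Prim's algorithm is used here only because growing the tree from the root directly yields parent links; Kruskal's algorithm would give the same tree when edge costs are distinct.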

Proposed Framework
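As a rough illustration of the first stage (sparse frame selection based on alignment and blur scores), the sketch below scores sharpness with the variance of the discrete Laplacian, a common blur measure that is assumed here and is not necessarily the score used in the paper. It then greedily keeps frames that are sharp enough while still overlapping the last kept frame. The `overlap` callable, both thresholds, and the function names `laplacian_variance` and `select_frames` are hypothetical placeholders for a real alignment score.

```python
def laplacian_variance(img):
    """Assumed blur score: variance of the 4-neighbour discrete Laplacian of a
    grayscale image given as a list of equal-length rows (at least 3x3).
    Higher values suggest a sharper frame."""
    h, w = len(img), len(img[0])
    vals = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


def select_frames(sharpness, overlap, min_overlap=0.5, min_sharpness=2.0):
    """Greedy sparse frame selection (hypothetical criterion).

    sharpness: per-frame blur scores (higher = sharper).
    overlap(i, j): assumed alignment score in [0, 1] for frames i < j.
    From the last kept frame, jump to the farthest later frame that is sharp
    enough and still overlaps it by at least `min_overlap`; if none exists,
    fall back to the next sharp frame so selection continues past the gap.
    """
    sharp = [i for i, s in enumerate(sharpness) if s >= min_sharpness]
    if not sharp:
        return []
    selected = [sharp[0]]
    while True:
        last = selected[-1]
        candidates = [j for j in sharp if j > last and overlap(last, j) >= min_overlap]
        if candidates:
            selected.append(max(candidates))  # sparsest still-alignable frame
            continue
        later = [j for j in sharp if j > last]
        if not later:
            return selected
        selected.append(later[0])  # no overlapping frame: bridge the gap


if __name__ == "__main__":
    scores = [5, 1, 6, 7, 0.5, 8, 9]
    print(select_frames(scores, lambda i, j: max(0.0, 1.0 - 0.2 * (j - i))))
```

With per-frame sharpness `[5, 1, 6, 7, 0.5, 8, 9]` and an overlap that falls by 0.2 per frame of separation, this keeps frames 0, 2, 3, 5 and 6: the two blurry frames are skipped and each kept frame overlaps its predecessor by at least 0.5.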
