
Schedule Summary

November 24


| Time | Speaker | Talk |
| --- | --- | --- |
| 09:00 | S Sundar | A shock-capturing meshless geometric conservation weighted least square method for solving shallow water equations |
| 09:30 | Sashi Kumaar Ganesan | GPU-Accelerated Parallel Algebraic Multigrid Solver |
| 10:00 | Panchatcharam M | GPU Accelerated Computing for Cancer Treatment |
| 10:30 | Aditya Konduri | An overview of scalable asynchronous PDE solvers |
| 11:00 | Nagaiah Chamakuri | Challenges for large scale simulation of cardiac electrophysiology |
| 11:30 | Soumyendu Raha | Sparsification of Reaction-Diffusion Dynamical Systems in complex networks |
| 12:00 | Sathish Vadhiyar | Pipelined Preconditioned Conjugate Gradient Methods for Distributed Memory Architectures |
| 13:00 | Lunch Break | |
| 14:00 | Deepak Subramani | Onboard routing of autonomous underwater vehicles: From PDEs to Deep Learning |
| 14:30 | Ratikanta Behera | Tensor Computations with Applications |
| 15:00 | Jim Thomas | Modeling fluid dynamics of the world's oceans |
| 15:30 | Phani Sudheer Motamarri | Towards fast and accurate quantum modeling of materials on extreme-scale architectures for accelerated materials discovery |
| 16:00 | Kandappan | Hierarchical low-rank structures on distributed systems |

Our Esteemed Speakers

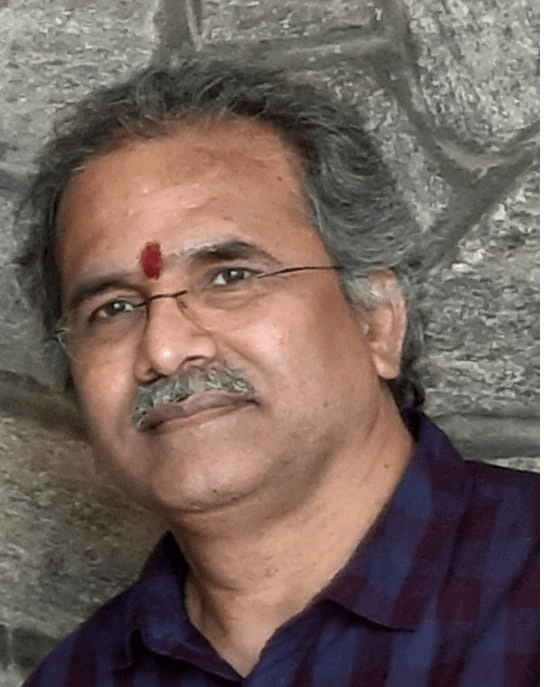

| Keynote Address on November 24 at 09:00 | |

S Sundar, IIT Madras and NIT Mizoram | A shock-capturing meshless geometric conservation weighted least square method for solving shallow water equations
The shallow water equations are solved numerically to simulate free-surface flows in two dimensions (2D). For flow situations such as hydraulic jumps, dam-break wave propagation, or bore wave propagation, the convective flux term in the shallow water equations must be discretized with a Riemann solver to capture shocks and discontinuities. Approximate Riemann solvers capture shocks and are popular for studying open-channel flow problems with traditional mesh-based methods. Meshless methods, on the other hand, can work on structured and unstructured grids, and even on points distributed irregularly over the computational domain. However, approximate Riemann solvers have not been reported within the framework of meshless methods for solving the shallow water equations. We have therefore proposed a shock-capturing meshless solver for the shallow water equations that simulates 2D flows over highly variable topography, even in the presence of shocks and discontinuities. The proposed meshless method uses the HLL (Harten-Lax-van Leer) Riemann solver to evaluate the convective flux. The spatial derivatives in the shallow water equations, and the reconstruction of conservative variables needed to calculate the flux terms, are computed using a geometric conservation weighted least squares (GC-WLS) approximation. The proposed method is tested on a range of numerically challenging problems and laboratory experiments.
Author Bio: S. Sundar is from the Indian Institute of Technology Madras and is currently the Director of NIT Mizoram. He is a Professor of Mathematics and was Head of the Department of Mathematics, IIT Madras, from 2017 to 2020. He has been a DAAD (German Academic Exchange Service) Research Ambassador since 2018. He is a Distinguished Alumnus of TU Kaiserslautern, Germany, and an Alumni Ambassador of the City of Kaiserslautern, Germany. He was Chairman of JEE (Advanced) 2015, IIT Madras, and before that Chairman of HSEE 2014, IIT Madras. He was one of the leads in introducing the Joint Seat Allocation portal for IITs and NITs in 2015. He is a Member of the Program Advisory Committee (Mathematical Sciences), DST-SERB. Prof. Sundar was a Member of the Faculty Council, IIT Madras Research Park, from 2012 to 2020. He is an Associate Editor of the International Journal of Advances in Engineering Sciences and Applied Mathematics (Springer) and an Editorial Member of the Journal of the Indian Mathematical Society and the Journal of Indian Academy of Mathematics. He was key to establishing the Centre of Excellence in Computational Mathematics and Data Science, Department of Mathematics, IIT Madras, supported under the Institute of Eminence, GoI. His research areas include numerics for partial differential equations (PDEs), mathematical modeling and numerical simulation. He has over 70 peer-reviewed research publications to his credit. He has guided 17 PhD students and currently supervises 8 research scholars. He has also guided over 150 MTech research projects, and over 10 MTech students are currently pursuing research projects under his guidance. He contributes actively to the Indian Academy of Mathematical Modeling and Simulation as a Fellow and has served as a visiting professor at universities across the globe. His interest in recent technologies in mathematical modelling is reflected in his close collaborations with leading technical universities in Germany and around the world. |
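For intuition about the flux evaluation described in the abstract above, here is a minimal sketch of the HLL numerical flux for the one-dimensional shallow water equations over a flat bed. It assumes g = 9.81 m/s², wet states (h > 0) on both sides, and the simple Davis wave-speed estimates; it is not the speaker's 2D meshless GC-WLS solver, and the function names are illustrative.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumed value)

def physical_flux(h, hu):
    """Flux F(U) of the 1D shallow water equations for U = (h, hu)."""
    u = hu / h
    return (hu, hu * u + 0.5 * G * h * h)

def hll_flux(left, right):
    """HLL numerical flux between left and right states (h, hu).

    Uses the Davis wave-speed estimates s_L = min(u_L - c_L, u_R - c_R) and
    s_R = max(u_L + c_L, u_R + c_R). Assumes h > 0 (no dry-bed treatment).
    """
    hl, hul = left
    hr, hur = right
    ul, ur = hul / hl, hur / hr
    cl, cr = math.sqrt(G * hl), math.sqrt(G * hr)
    sl = min(ul - cl, ur - cr)
    sr = max(ul + cl, ur + cr)
    fl = physical_flux(hl, hul)
    fr = physical_flux(hr, hur)
    if sl >= 0.0:
        return fl  # all waves move right: take the left (upwind) flux
    if sr <= 0.0:
        return fr  # all waves move left: take the right (upwind) flux
    # Subsonic case: HLL average of the two fluxes plus a dissipation term
    return tuple(
        (sr * f_l - sl * f_r + sl * sr * (q_r - q_l)) / (sr - sl)
        for f_l, f_r, q_l, q_r in zip(fl, fr, left, right)
    )

# Dam-break interface: deeper still water on the left, shallower on the right
flux = hll_flux((2.0, 0.0), (1.0, 0.0))
print(flux)
```

The positive mass flux in the dam-break example reflects water moving from the deep side to the shallow side; in a meshless setting the left and right states would come from the GC-WLS reconstruction rather than from cell averages.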

| November 24 at 09:30 | |

Sashi Kumaar Ganesan, IISc Bangalore | GPU-Accelerated Parallel Algebraic Multigrid Solver
This talk presents hybrid CPU-GPU algorithms for algebraic multigrid (AMG) methods that effectively utilize both CPU and GPU resources. Specifically, a hybrid AMG framework is developed that minimizes GPU memory usage while maintaining performance on par with GPU-only implementations. The framework can be tuned to operate with significantly less GPU memory, enabling the solution of larger algebraic systems. Combining this hybrid AMG framework, used as a preconditioner, with Krylov subspace solvers such as the Conjugate Gradient and BiCG methods yields a comprehensive solver stack for a wide range of problems. The performance of the proposed framework is analyzed across an array of matrices of varying properties and sizes. The CPU-GPU algorithms are also compared with GPU-only implementations, demonstrating their considerably lower memory requirements.
Author Bio: Sashi Kumaar Ganesan is a Professor and Chair of the Department of Computational and Data Sciences (CDS), Indian Institute of Science (IISc), Bangalore. He joined IISc in 2011 as an Assistant Professor. Before joining the institute, he was a Research Associate at Imperial College London and an Alexander von Humboldt Fellow at WIAS Berlin. He received his Ph.D. from Otto-von-Guericke University, Germany. His research group focuses on finite element analysis, scientific computing and machine learning, and high-performance computing. He is also a founder of Zenteiq Edtech Pvt. Ltd., a deep-tech start-up incubated at FSID, IISc. |

| November 24 at 10:00 | |

Panchatcharam M, IIT Tirupati | GPU Accelerated Computing for Cancer Treatment
In this presentation, we focus on a pivotal aspect of cancer treatment protocols: streamlining the computation so that treatment outcomes can be predicted more efficiently. Using state-of-the-art Graphics Processing Units (GPUs) reduces the prediction time for a one-hour treatment protocol from the conventional 6 hours to just 2 minutes. The primary objective is to develop a fast finite element solver and Joule heating solver that accurately forecast both the number and the volume of dead cells in the vicinity of the tumor. Beyond accelerating predictive analytics, this advance holds great promise for optimizing cancer treatment strategies.
Author Bio: Panchatcharam Mariappan is a faculty member at IIT Tirupati. He completed his PhD at IIT Madras and TU Kaiserslautern, Germany. His research interests include numerics of PDEs, CFD, GPU computing and heat transfer. |

| November 24 at 10:30 | |

Aditya Konduri, IISc Bangalore | An overview of scalable asynchronous PDE solvers
Numerical simulations of physical phenomena and engineering systems governed by nonlinear partial differential equations demand massive computations with extreme levels of parallelism. Current state-of-the-art simulations routinely run on hundreds of thousands of processing elements (PEs). At extreme scales, data movement and its synchronization become a bottleneck to solver scalability. Recently, an asynchronous computing method that relaxes communication synchronization at a mathematical level has shown significant promise in improving the scalability of PDE solvers. In this method, the synchronization of halo exchanges between PEs is relaxed, and computations proceed regardless of communication status. It was shown that the numerical accuracy of standard schemes, such as finite differences, is significantly affected when they are implemented with relaxed communication synchronization. New asynchrony-tolerant schemes were subsequently developed that compute accurate solutions and scale well. This talk will present an overview of the status of the asynchronous computing method for PDE solvers and its applicability to exascale simulations. Relaxing data synchronization at a mathematical level can further leverage asynchronous parallel communication and runtime models, and the coupling of asynchrony-tolerant schemes with such models will be discussed.
Author Bio: Aditya Konduri is an Assistant Professor in the Department of Computational and Data Sciences, Indian Institute of Science, Bengaluru. Prior to this, he was a Postdoctoral Researcher at the Combustion Research Facility, Sandia National Laboratories, USA. His current research includes large-scale simulations of turbulent combustion relevant to gas turbine and scramjet engines, design of machine learning methods for detecting anomalous or extreme events in scientific phenomena, and development of scalable asynchronous numerical methods and simulation algorithms for solving partial differential equations on massively parallel computing systems. Aditya completed his PhD at Texas A&M University. |

| November 24 at 11:00 | |

Nagaiah Chamakuri, IISER Trivandrum | Challenges for large scale simulation of cardiac electrophysiology
The bidomain equations are the state-of-the-art model of cardiac electrophysiology and describe normal or pathological propagation of the excitation wave through cardiac tissue. The bidomain model consists of a system of elliptic partial differential equations coupled with a nonlinear parabolic equation of reaction-diffusion type, where the reaction term, which models ionic transport, is described by a set of ordinary differential equations. Because the ionic currents are described by ODEs, the PDE part dominates the solution effort; it is therefore not clear whether commonly used splitting methods can outperform a coupled approach while maintaining good accuracy. The first part of the talk presents results comparing the coupled solver approach with commonly used splitting methods on more sophisticated physiological models, and demonstrates a novel memory-efficient computational technique for solving the coupled systems of equations. In the second part, we address these challenges by combining space-time adaptive discretization with dynamic load balancing for parallel computing.
Author Bio: Nagaiah Chamakuri is a faculty member at IISER Trivandrum. He completed his PhD at the University of Magdeburg, Germany. He was a Senior Research Scientist at the Max Delbrück Center, Berlin, Germany. |
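To make the splitting-versus-coupled question concrete, the sketch below applies Strang-type operator splitting (half reaction step, full diffusion step, half reaction step) to a scalar 1D reaction-diffusion model, a monodomain-like simplification rather than the full bidomain system. The cubic Nagumo reaction term, the parameter values and the zero-flux boundaries are illustrative assumptions. For brevity each reaction half step is a single explicit Euler step, so the scheme as written is only first-order accurate in time.

```python
import numpy as np

def reaction(u, a=0.1):
    """Cubic Nagumo-type term f(u) = u(1 - u)(u - a): an assumed stand-in for an ionic ODE model."""
    return u * (1.0 - u) * (u - a)

def implicit_diffusion_matrix(n, D, dx, dt):
    """Matrix (I - dt*D*Lap) of one implicit-Euler diffusion step with zero-flux boundaries."""
    c = dt * D / dx**2
    lap = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    lap[0, 1] = 2.0    # reflect the ghost point at the left boundary
    lap[-1, -2] = 2.0  # and at the right boundary
    return np.eye(n) - c * lap

def strang_step(u, A, dt):
    """One splitting step: half reaction, full diffusion, half reaction."""
    u = u + 0.5 * dt * reaction(u)  # local ODE part, explicit Euler half step
    u = np.linalg.solve(A, u)       # PDE part, implicit diffusion step
    u = u + 0.5 * dt * reaction(u)  # second reaction half step
    return u

# Excitation front in a 1D cable: excited on the left, resting elsewhere (assumed setup)
n, D, dt, steps = 201, 1e-3, 0.05, 200
x = np.linspace(0.0, 1.0, n)
dx = x[1] - x[0]
u = np.where(x < 0.1, 1.0, 0.0)
A = implicit_diffusion_matrix(n, D, dx, dt)
for _ in range(steps):
    u = strang_step(u, A, dt)
print(u[0], u[-1])
```

The appeal of splitting is visible in `strang_step`: the reaction update is pointwise and trivially parallel, while only the diffusion step needs a (here dense, in practice sparse) linear solve. A coupled approach would instead solve for reaction and diffusion together in each step.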

| November 24 at 11:30 | |

Soumyendu Raha, IISc Bangalore | Sparsification of Reaction-Diffusion Dynamical Systems in complex networks
A data-driven approach to sparsifying reaction-diffusion dynamical systems in complex networks (more precisely, in graphs induced by complex networks) is studied as an inverse problem guided by data representing the flows in the network. Model reduction techniques such as proper orthogonal decomposition (POD) are used to make the problem computationally feasible. The network sparsification problem is mapped to a data assimilation problem on a reduced-order model (ROM) space, with constraints aimed at preserving the eigenmodes of the Laplacian matrix under perturbations. For computational feasibility, approximations to the eigenvalues and eigenvectors of the perturbed Laplacian matrix are proposed, and a custom function based on these approximations is included as a constraint in the data assimilation framework. The resulting eigenvalues are analyzed as pseudo-eigenvalues of the perturbed Laplacian matrix. As a use case, this framework has been used to sparsify neural ODENets.
Author Bio: Soumyendu Raha is a professor at CDS, IISc. He completed his PhD at the University of Minnesota. Before joining IISc, he taught at UCSB and NDSU, and he has also worked at Cray and IBM. |
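The POD step mentioned in the abstract above can be illustrated in a few lines: collect state snapshots as columns, take a thin SVD, and keep the leading left singular vectors. This is a minimal NumPy sketch; the 99% energy threshold and the synthetic two-mode data are illustrative assumptions, and it does not reproduce the speaker's sparsification or data assimilation framework.

```python
import numpy as np

def pod_basis(snapshots, energy=0.99):
    """POD modes of a snapshot matrix with one state vector per column.

    Keeps the smallest number r of left singular vectors whose squared
    singular values capture at least `energy` of the total.
    """
    u, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    captured = np.cumsum(s**2) / np.sum(s**2)
    r = int(np.searchsorted(captured, energy)) + 1
    return u[:, :r]

# Synthetic snapshots (assumption): two spatial modes with time-varying
# amplitudes plus small noise, standing in for simulated network flows
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 200)
t = np.linspace(0.0, 1.0, 50)
modes = np.stack([np.sin(np.pi * x), np.sin(2 * np.pi * x)], axis=1)    # (200, 2)
amps = np.stack([np.cos(2 * np.pi * t), np.sin(4 * np.pi * t)], axis=0)  # (2, 50)
X = modes @ amps + 1e-6 * rng.standard_normal((200, 50))

Phi = pod_basis(X)   # reduced basis, shape (200, r)
X_r = Phi.T @ X      # reduced-order coordinates, shape (r, 50)
print(Phi.shape)
```

Working in the r-dimensional coordinates `X_r` instead of the full 200-dimensional state is what makes an optimization such as data assimilation affordable on the ROM space.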

| November 24 at 12:00 | |

Sathish Vadhiyar, IISc Bangalore | Pipelined Preconditioned Conjugate Gradient Methods for Distributed Memory Architectures
As HPC enters the exascale era, asynchronous algorithms, techniques that overlap computation with communication, and methods that minimize or avoid global synchronization become essential for delivering high performance and scalability on very large numbers of cores. In this talk, I will cover our recent work on pipelined preconditioned Conjugate Gradient (CG) methods for distributed memory systems, developed on these principles for exascale computing. The traditional CG algorithm performs costly allreduce operations for its dot products; these involve global synchronization, and subsequent operations, including the sparse matrix-vector product (SpMV), must wait for their results. We have developed PIPECG-OATI (PIPECG-One Allreduce per Two Iterations), which reduces the number of allreduces from three per iteration to one per two iterations and overlaps it with two preconditioner applications (PCs) and two SpMVs. For better scalability through more overlap, we also developed the pipelined s-step CG method, which reduces the number of allreduces to one per s iterations and overlaps it with s PCs and s SpMVs. We compared our methods with state-of-the-art CG variants on a variety of platforms and demonstrated speedups of 2.15x to 3x over the existing methods. We also developed communication-overlapping CG variants for GPU-accelerated nodes, proposing and implementing three hybrid CPU-GPU execution strategies for the PIPECG method. Our experiments on GPUs showed average speedups of 1.45x to 3x over existing CPU- and GPU-based implementations.
Author Bio: Sathish Vadhiyar is Professor in the Department of Computational and Data Sciences and Chair of the Supercomputer Education and Research Centre, Indian Institute of Science. He obtained his B.E. degree from the Department of Computer Science and Engineering at Thiagarajar College of Engineering, India, in 1997, and his Master's degree in Computer Science from Clemson University, USA, in 1999. He received his PhD from the Computer Science Department at the University of Tennessee, USA, in 2003. His research areas are HPC application frameworks, including multi-node and multi-device programming models and runtime strategies for irregular applications such as graph and AMR applications; performance characterization and scalability studies; processor allocation, mapping and remapping for large-scale executions; middleware for production supercomputer systems; and fault tolerance for parallel applications. He has also worked on applications in climate science and visualization in collaboration with researchers in these areas. Dr. Vadhiyar is a senior member of IEEE and a professional member of ACM, and has published papers in peer-reviewed journals and conferences. He was program co-chair of the HPC area at HiPC 2022 and chair of the ACM senior member award committee, was an associate editor of IEEE TPDS, and has served on the program committees of conferences on parallel and grid computing, including IPDPS, IEEE Cluster, CCGrid, ICPP, eScience and HiPC. Dr. Vadhiyar is also an investigator in the National Supercomputing Mission (NSM), a flagship project to create an HPC ecosystem in India, where he manages the R&D projects related to HPC in the country. |
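To make the synchronization points in the abstract above concrete, here is a minimal NumPy sketch of the standard (non-pipelined) preconditioned CG iteration, assuming a Jacobi preconditioner. It is the baseline that pipelined methods restructure, not PIPECG-OATI or the s-step method themselves; the comments mark the three dot products per iteration that would each require a global allreduce on a distributed-memory system.

```python
import numpy as np

def pcg(A, b, tol=1e-10, max_iter=1000):
    """Standard preconditioned CG with a Jacobi (diagonal) preconditioner.

    In a distributed-memory run, each dot product marked "allreduce" would be
    a global reduction that every process must wait for before continuing.
    """
    m_inv = 1.0 / np.diag(A)          # Jacobi preconditioner M^{-1} (assumed choice)
    b_norm = np.linalg.norm(b)
    x = np.zeros_like(b)
    r = b - A @ x
    z = m_inv * r                     # PC: apply the preconditioner
    p = z.copy()
    rz = np.dot(r, z)
    for k in range(max_iter):
        q = A @ p                     # SpMV
        alpha = rz / np.dot(p, q)     # allreduce 1: blocks the updates below
        x += alpha * p
        r -= alpha * q
        if np.sqrt(np.dot(r, r)) < tol * b_norm:  # allreduce 2: convergence test
            return x, k + 1
        z = m_inv * r                 # PC
        rz_new = np.dot(r, z)         # allreduce 3: needed before the next direction
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter

# 1D Poisson matrix as a small symmetric positive definite test problem
n = 100
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
b = np.ones(n)
x, iters = pcg(A, b)
print(iters)
```

Each reduction sits on the critical path: the SpMV of the next iteration cannot start until `rz_new` is known. Pipelined variants rewrite the recurrences so that these reductions can run concurrently with the SpMV and preconditioner work.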

| Lunch Break on November 24 at 13:00 | |

| November 24 at 14:00 | |

Deepak Subramani, IISc Bangalore | Onboard routing of autonomous underwater vehicles: From PDEs to Deep Learning
Intelligent onboard optimal routing is essential for the efficient use of autonomous marine platforms in a variety of scientific, security and humanitarian applications. In this context, we first develop a CPU-based dynamically orthogonal PDE solver for optimal routing. Next, we develop a GPU-accelerated dynamic programming solver for stochastic environments. Finally, we show how the expert trajectories generated by these two exact approaches can be used to develop a transformer-based foundational neural model for onboard routing.
Author Bio: Dr. Deepak Subramani is an Assistant Professor in the Dept. of Computational and Data Sciences at the Indian Institute of Science (IISc), Bangalore. He obtained his Ph.D. in Mechanical Engineering and Computation from the Massachusetts Institute of Technology (MIT), USA, and his B.Tech in Mechanical Engineering from IIT Madras. He works on developing AI/ML solutions for geoscience applications, uncertainty quantification, and optimal routing of autonomous vehicles, and is an expert in data-driven modeling, deep learning, scientific machine learning and scientific computing. He has won several awards throughout his career, including the IISc Award for Excellence in Teaching, the Arcot Ramachandran Young Investigator Award, the INSPIRE Faculty Award, the de Florez research award at MIT, the SNAME award, the GE Foundation Leader Scholar Award, and the National Talent Search. He has more than 40 peer-reviewed publications in top journals and international conferences. |

| November 24 at 14:30 | |

Ratikanta Behera, IISc Bangalore | Tensor Computations with Applications
In the era of big data, artificial intelligence, and machine learning, there is a need to process multiway (tensor-shaped) data. Such data are mostly of order three or higher, and their sizes can reach billions of entries. Large volumes of multidimensional data are a great challenge to process and analyze, and matrix representations are not sufficient to capture all of the information content of multiway data arising in different fields. In this talk, we discuss a closed multiplication operation between tensors, together with the associated notions of the transpose, inverse, and identity of a tensor. We then discuss the application of tensor factorization to color imaging problems.
Author Bio: Ratikanta Behera is a faculty member at CDS, IISc. He completed his PhD at IIT Delhi. Prior to joining IISc, he was a faculty member at IISER Kolkata. His research interests are tensor decompositions, neural networks, numerical linear algebra, generalized inverses of tensors, wavelets in scientific computing, and high-performance computing. |
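The abstract above does not name the tensor product it uses. One common choice in the literature on generalized inverses of tensors is the Einstein product, which contracts the last N indices of one tensor with the first N indices of another. The NumPy sketch below assumes that product and shows how transpose, identity and inverse can be defined for it; it is an illustration, not necessarily the operation presented in the talk.

```python
import numpy as np

def einstein_product(A, B, n):
    """A *_n B: sum over the last n indices of A and the first n indices of B."""
    return np.tensordot(A, B, axes=n)

def identity_tensor(dims):
    """I[i1..iN, j1..jN] = 1 if i_k == j_k for every k, else 0."""
    size = int(np.prod(dims))
    return np.eye(size).reshape(tuple(dims) * 2)

def tensor_transpose(A, n):
    """Swap the first n indices with the rest: A^T[j.., i..] = A[i.., j..]."""
    return np.transpose(A, list(range(n, A.ndim)) + list(range(n)))

def tensor_inverse(A, n):
    """Tensor X with A *_n X = I, computed through the matrix unfolding of A."""
    if A.shape[:n] != A.shape[n:]:
        raise ValueError("A must have shape dims + dims")
    size = int(np.prod(A.shape[:n]))
    return np.linalg.inv(A.reshape(size, size)).reshape(A.shape)

rng = np.random.default_rng(1)
A = rng.standard_normal((2, 3, 2, 3))  # a 4th-order "square" tensor for n = 2
A_inv = tensor_inverse(A, 2)
I = identity_tensor((2, 3))
print(np.allclose(einstein_product(A, A_inv, 2), I))
```

The design choice here is that the Einstein product of "square" tensors corresponds exactly to the matrix product of their row-major unfoldings, which is why the inverse and transpose can be computed by reshaping; the product is closed because the result has the same shape as the operands.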

| November 24 at 15:00 | |

Jim Thomas, TIFR | Modeling fluid dynamics of the world's oceans
Author Bio: Jim Thomas is a faculty member at the International Centre for Theoretical Sciences, Tata Institute of Fundamental Research, and at the Centre for Applicable Mathematics, Tata Institute of Fundamental Research. He received his PhD in Mathematics and Atmosphere Ocean Science from the Courant Institute of Mathematical Sciences, New York University, where Oliver Bühler and Shafer Smith were his PhD advisors. His research focuses on understanding the fluid dynamics of the world's oceans. He uses a combination of applied mathematical techniques, idealized mathematical models, and scale-specific numerical integration of the governing equations to understand intricate details of oceanic flows. |

| November 24 at 15:30 | |

Phani Sudheer Motamarri, IISc Bangalore | Towards fast and accurate quantum modeling of materials on extreme-scale architectures for accelerated materials discovery
Quantum-mechanical modeling of materials has played a significant role in determining a wide variety of material properties over the past few decades. In particular, Kohn-Sham density functional theory (DFT) calculations, which involve computing the self-consistent solution of a nonlinear eigenvalue problem, have provided many crucial insights into materials behavior and occupy a sizable fraction of the world's computational resources today. However, the stringent accuracy needed to compute meaningful material properties, combined with the asymptotic cubic-scaling computational complexity of the underlying eigenvalue problem, means that accurate DFT calculations demand enormous computational resources. As a result, these calculations are routinely limited to material systems with at most a few thousand electrons. This talk will discuss recent advances in the state of the art that enable fast and accurate large-scale DFT calculations via DFT-FE, a massively parallel, open-source, finite-element (FE) based DFT code for hybrid CPU-GPU architectures. DFT-FE employs adaptive FE discretization alongside novel HPC-centric numerical strategies based on mixed-precision arithmetic that significantly reduce data movement costs and increase arithmetic intensity on evolving hybrid CPU-GPU architectures. The talk will also highlight ongoing work at IISc on further accelerating DFT-FE calculations using the projector-augmented wave formalism, and on methods to incorporate non-collinear magnetism with spin-orbit coupling effects in DFT-FE calculations. Ongoing work on matrix-free approaches to accelerate the FE sparse matrix-multivector multiplications that arise in iterative eigensolvers on multinode CPU-GPU architectures will then be discussed.
Finally, the loop will be closed by discussing recent developments in AI/ML frameworks that can help accelerate materials discovery. These advances have wide-ranging implications for tackling critical scientific and technological problems, including designing new catalytic materials for clean fuel production and better materials for energy storage, devising materials and mechanisms for carbon dioxide sequestration, and discovering novel qubit materials for quantum computers, to name a few.
Author Bio: Phani Motamarri has been an Assistant Professor in the Department of Computational and Data Sciences (CDS), IISc Bangalore, since December 2019. Prior to this, he was a research faculty member at the University of Michigan, Ann Arbor, USA, where he received his PhD from the Department of Mechanical Engineering, working at the intersection of computational materials physics and scientific computing. His PhD work received the Robert J. Melosh Medal for the best PhD student paper in finite element methods, awarded by the International Association for Computational Mechanics (IACM). His primary research interests include developing mathematical techniques and HPC-centric computational algorithms that leverage extreme-scale architectures for quantum modeling of materials, and harnessing these capabilities to address challenging material modeling problems in key scientific areas. His current interests also include exploring lightweight graph neural network-based ML approaches for accelerated materials discovery. He is also one of the lead developers of DFT-FE, an open-source code for massively parallel DFT calculations that was a finalist for the 2019 ACM Gordon Bell Prize, a prestigious prize in scientific computing. He has also received an NSM grant award for exascale R&D.
Phani Motamarri is also part of the international team that won the 2023 ACM Gordon Bell Prize, marking the first time that a research group from India has been part of this prestigious accolade. |

| November 24 at 16:00 | |

Kandappan, Shiv Nadar University Chennai | Hierarchical low-rank structures on distributed systems
Large dense matrices are frequently encountered in a wide range of scientific and engineering problems. For a dense N x N matrix, both the storage cost and the cost of a matrix-vector product scale quadratically with N, so naive matrix-vector products become impractical for large N. However, specific classes of dense matrices that arise in N-body problems, such as radial basis function interpolation and scattering problems, have a desirable property: their off-diagonal submatrices are numerically low-rank. This property reduces both the space complexity of storing these matrices and the computational complexity of matrix-vector products. Such matrices are called hierarchical matrices, or H-matrices. In this talk, we will discuss a class of H-matrices known as HODLR3D and how well it scales on distributed memory systems.
Author Bio: Dr. V.A. Kandappan is an Assistant Professor in the Department of Computer Science at Shiv Nadar University Chennai. He has a well-rounded academic background, having completed a Bachelor's degree in Electrical and Electronics Engineering and a Master's degree in Power Systems Engineering from Anna University, and a PhD in Computational Science from IIT Madras. Before his PhD, he worked as a Graduate Engineer at IBM India Pvt. Ltd., specializing in software testing automation. His current research focuses on developing fast matrix algorithms for scientific computing applications, with a particular interest in high-performance computing and machine learning. |
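The key property named in the abstract above, numerically low-rank off-diagonal blocks, can be checked directly. This NumPy sketch assumes a simple 1D kernel 1/(1 + |x - y|) on uniformly spaced points, a hypothetical stand-in for the RBF or scattering kernels the abstract mentions. It compresses one off-diagonal block with a truncated SVD and compares its numerical rank with that of a diagonal block. It shows a single HODLR-style level only, not HODLR3D or its distributed implementation.

```python
import numpy as np

def truncated_svd(M, tol=1e-10):
    """Factors U (m x k) and V (k x n) with M ~= U @ V.

    k counts the singular values larger than tol times the largest one.
    """
    u, s, vt = np.linalg.svd(M, full_matrices=False)
    k = int(np.sum(s > tol * s[0]))
    return u[:, :k] * s[:k], vt[:k, :]

# Hypothetical 1D kernel matrix on N uniformly spaced points
N = 400
x = np.linspace(0.0, 1.0, N)
K = 1.0 / (1.0 + np.abs(x[:, None] - x[None, :]))

half = N // 2
diag_block = K[:half, :half]  # contains the kink of |x - y| along x == y
off_block = K[:half, half:]   # interaction between the two halves of the points

U, V = truncated_svd(off_block)
diag_rank = truncated_svd(diag_block)[0].shape[1]
print("off-diagonal rank:", U.shape[1], "diagonal-block rank:", diag_rank)
print("stored entries:", U.size + V.size, "instead of", off_block.size)
```

Applying `U @ (V @ v)` to a vector costs O(k(m + n)) instead of O(mn). Recursing on the diagonal blocks, which are not low-rank, gives the HODLR structure.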