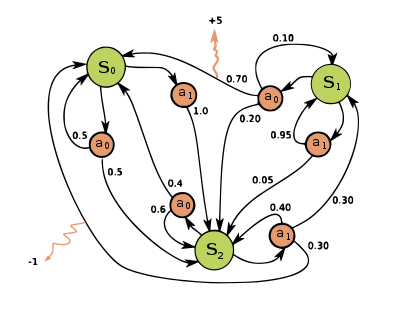

While the combination of deep learning and reinforcement learning has led to tremendous success, relatively little work ties together reinforcement learning, skill acquisition, memory and attention on a deep neural network substrate. We have had some success in this direction and are continuing to expand the horizon of possibilities. Our work on Fine Grained Action Repetition lets an agent learn both the right action and how long to persist with it, and can be layered on top of any deep reinforcement learning algorithm to improve its performance. Recently, it was mentioned in a keynote at a top conference as a useful deep RL technique for speeding up learning. Our work on Robust Deep Reinforcement Learning has garnered nearly 100 citations since it appeared in 2017.

One tangential research direction this has engendered is the application of deep learning and selective attention to natural language processing. Attention yields more meaningful representations of text that suit several natural language tasks. I am currently exploring the role of reinforcement learning in attention mechanisms for NLP tasks such as question answering and dialogue. One such paper was recently accepted at EMNLP 2019. The goal is to develop another cognitive module for a truly autonomous agent.

We have established one of the most active deep learning groups in India. Our work on joint representation learning is widely cited, and our work on Bridge Correlational Networks was mentioned by the Future of Life Institute in an article on the top 15 AI breakthroughs of 2015.